When markets become dysfunctional, some form of intervention becomes critical to reinstate the legitimacy of laissez-faire economics and restore public trust in government. Historically, this intervention has often taken the form of statutory regulation, authorising the government to command regulatees to achieve a specified regulatory outcome through certain prescribed means, and issuing sanctions against non-compliance. Most environmental protection laws fall under the category of this command-and-control (CAC) style of regulation.

In the analogue world, where firms moved slowly and new markets took decades to develop, lawmakers and statutory regulators had enough time to work out the ‘perfect’ CAC prescriptions to rectify any market dysfunction. This no longer holds in today’s hyper-connected world, where firms move at the speed of gigabytes and new business models emerge overnight. The result is a serious pacing problem for the CAC style of regulation in governing risks from rapid technological advancements.

This article analyses the inadequacy of CAC regulations against the backdrop of breakneck innovation in artificial intelligence (AI)-based technologies and the dynamically expanding universe of risks and benefits associated with their accelerated adoption. Government-led industry self-regulation is then proposed as an alternative regulatory approach to harness opportunities for AI-enabled productivity gains and public benefit, while also addressing critical public safety concerns around AI adoption.

Why can’t CAC regulations keep up?

CAC regulations typically end up imposing significant compliance obligations on regulatees while also demanding the creation of brand-new enforcement and compliance monitoring mechanisms that could significantly burden the public exchequer. Therefore, CAC regulators must define the subject and scope of regulation precisely enough to avoid under- or over-regulation, and in a manner that is clearly comprehensible to both regulatory authorities and regulatees, to ensure satisfactory compliance. To do so credibly, CAC regulators must demonstrate an accurate understanding of what they are seeking to regulate, and the competence to deliver prescriptions that can adapt as the regulatory subject and scope change over time. If any of these conditions is not met, the resulting CAC regulation will most likely prove to be sub-optimal or ineffective in achieving its intended objective.

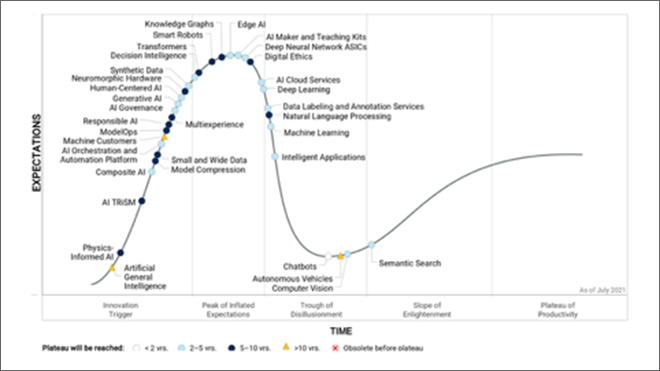

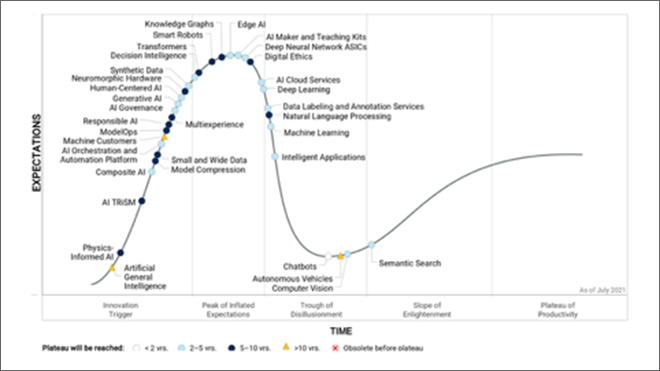

AI-based technologies are evolving at a breakneck pace, with “an above average number of technologies on the Hype Cycle (see Figure 1) reaching mainstream adoption within two to five years,” according to Gartner. Because the AI-innovation landscape is evolving so fast, it is very difficult to identify in advance, in a timely and accurate manner, a finite set of distinct use-cases and the universe of risks and benefits associated with their adoption. This gap between advancements in AI and optimal regulatory responses to steer them in the right direction becomes even wider in low- and middle-income countries, where regulatory institutions continue to grapple with resource crunches.

Figure 1: Hype Cycle for Artificial Intelligence, 2021

Source: Gartner (September 2021)

Therefore, it is not hard to imagine why any form of CAC regulation aimed at managing risks from AI adoption is likely to have its subject and scope ill-defined. The apparent ambiguities in the definitions of AI and its several use-cases under the European Commission’s proposed Artificial Intelligence Act, for instance, have already garnered much criticism from both civil society organisations and the technical community. Such instances of regulatory miscommunication would inevitably lead to under- or over-regulation of AI innovation and enterprise activities, and induce regulatory uncertainty or malaise amongst industry actors. The resulting sub-optimal regulatory regime for AI could seriously damage the growth and scaling prospects of the AI industry by stifling investment and innovation, with missed opportunities for AI-enabled productivity gains and public benefit, while also under-serving critical public safety concerns around AI adoption as risky use-cases fall outside the regulatory subject and scope.

What’s the alternative regulatory approach?

The pacing problem in AI regulation calls for a robust government-led industry self-regulation (GIS) regime that could adaptively respond to risks arising from AI adoption. Under the GIS arrangement, the government, via rigorous consultations with all stakeholders, would define regulatory goals or principles, and refrain from prescribing the means and methods to achieve them; instead, the industry would take on that responsibility by formulating suitable standards and codes of conduct, which the government may then choose to certify. The GIS arrangement holds immense potential to combine government oversight with industry expertise to guide responsive and agile interventions in AI-led markets, equitably balancing the interests of private innovation and enterprise with those of public safety.

Yet, critics could dispute the optimality of this regulatory approach by citing the conflict generally presumed between private firms’ profit motives and the public interest in any industry self-regulation arrangement. Under this view, there is always a fair possibility of regulatory capture by firms that fail to realise incentives to self-regulate effectively, leading to unfavourable or even fatal consequences for the public. For instance, where the cost of adopting a certain safety standard for an AI-based product could hurt the firm’s bottom line, and the firm perversely manages to cut costs by launching the unstandardised version of the product into the market, the prevailing industry self-regulation arrangement will have clearly failed.

However, this classical critique of industry self-regulation, though valid per se, cannot be tenably levelled against the proposed GIS arrangement for responsible AI adoption for three principal reasons:

First, the proposed regulatory approach is fundamentally predicated on the adoption of a clear-cut framework for reporting industry practices to the government, with a view to demonstrating the AI industry’s due diligence in pursuing self-regulation in alignment with the regulatory goals and principles for responsible AI adoption laid down by the government. This is unlike a pure self-regulation arrangement without any form of government involvement. Put simply, in the proposed self-regulation arrangement, the fox does not remain in full charge of the hen house. Therefore, apprehensions around inherent misalignment between private incentives and public interest are allayed under this arrangement, especially so long as the government remains committed to facilitating public engagement on critical regulatory questions concerning the ethics of AI adoption.

Second, strong market incentives for responsible AI adoption are becoming apparent. AI-led enterprises are recognising the medium- to long-term value for their shareholders of investing strategically in risk assessment and mitigation tools and resources, and of strengthening corporate governance structures for end-to-end adoption of responsible AI best practices that prioritise user trust and safety. This value for AI-led enterprises and their shareholders is expected in the form of competitive advantage through enhanced product or service quality, better talent and customer acquisition and retention, and an upper hand in competitive bidding.

Third, globally, a good number of civil society organisations and interdisciplinary think tanks have moved quickly to track and report emergent risks from AI adoption, propose rigorous measures for their mitigation, and make sure that fairness, transparency, and accountability remain among the highest corporate priorities for all AI-led enterprises. With this, the AI industry has become increasingly aware of the existential risks from widespread public censure of unethical applications of AI in today’s hyper-connected world. It realises that any instance of irresponsible behaviour, whether proven or perceived, could destroy the government’s confidence in industry self-regulation of AI in the blink of an eye, forcing external enforcement measures by the government that would likely be prescriptive and sub-optimal for the industry’s growth. This constant, implicit threat of facing unfavourable regulatory obligations, dubbed by scholars the ‘shadow of authority’, is arguably a formidable motivating factor in promoting desirable firm behaviour. A good case in point could be Meta’s recent announcement of a ‘Personal Boundary’ feature on its virtual reality platform, Horizon Worlds, in response to public outcry on social media over claims of sexual harassment on the platform.

Conclusion

While GIS should serve as the default arrangement for regulating risks from AI adoption, it may be ill-suited to certain contexts. For instance, in exceptional use-cases such as predictive policing, where the risk of harm from the deployment of a certain AI-based application is probable and significant and constitutional fundamental rights are at stake, it may be advisable for the government to instead codify essential safety protocols and issue stringent sanctions against non-compliance, as also recommended by policy think tanks like NITI Aayog in India.

However, even then, detailed inputs from industry stakeholders must be sought proactively to guide the regulatory design of any such intervention, to ensure, inter alia, precision and intelligibility of the regulatory subject and scope, and practicability of mandated compliance procedures. As previously discussed, these inputs will remain critical to hedge against the risk of negative fallout for AI-led markets and the public from prescriptive regulation of AI adoption.

No wonder even outspoken sceptics of industry self-regulation, like Margaret Mitchell, former Lead of Ethical AI at Google, have emphasised the indispensable value of industry insights in designing an optimal regulatory regime for responsible AI adoption: “…it’s possible to do really meaningful research on AI ethics when you can be there in the company, understanding the ins and outs of how products are created. If you ever want to create some sort of auditing procedure, then really understanding — from end to end — how machine learning systems are built is really important.”

The views expressed above belong to the author.
