You walk past a camera mounted on a traffic pole. The long white camera spots you, takes your picture, and sends it to a facial recognition system at the police station. The software maps your facial geometry, locates your facial features, matches them against a database to identify you, and sends the information to the police. The police then trace you and engage with you. All of this happens within a short span of 15 minutes. Welcome to the world of public security and surveillance using Facial Recognition Technology (FRT).

The above paragraph can appear scary or exciting, depending on which logic of action you value more. In their seminal piece in 1988, March and Olsen described two logics of action, namely the ‘logic of appropriateness’ and the ‘logic of consequence’. The challenge of regulating FRT is that it requires a difficult balancing act between these two logics of action. As the case of the United Kingdom (UK) shows, different stakeholders might emphasise different logics of action, and reconciling them is not always easy.

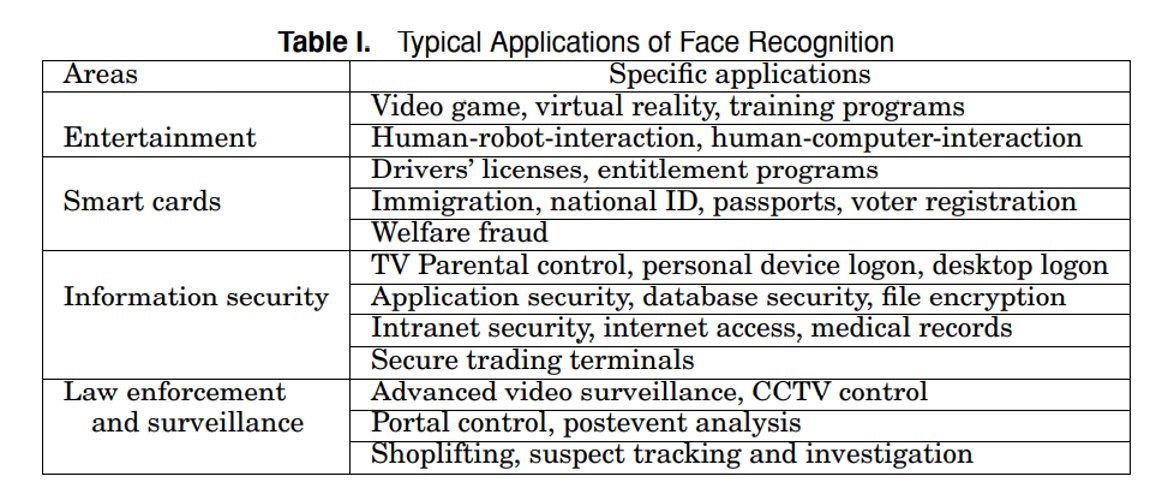

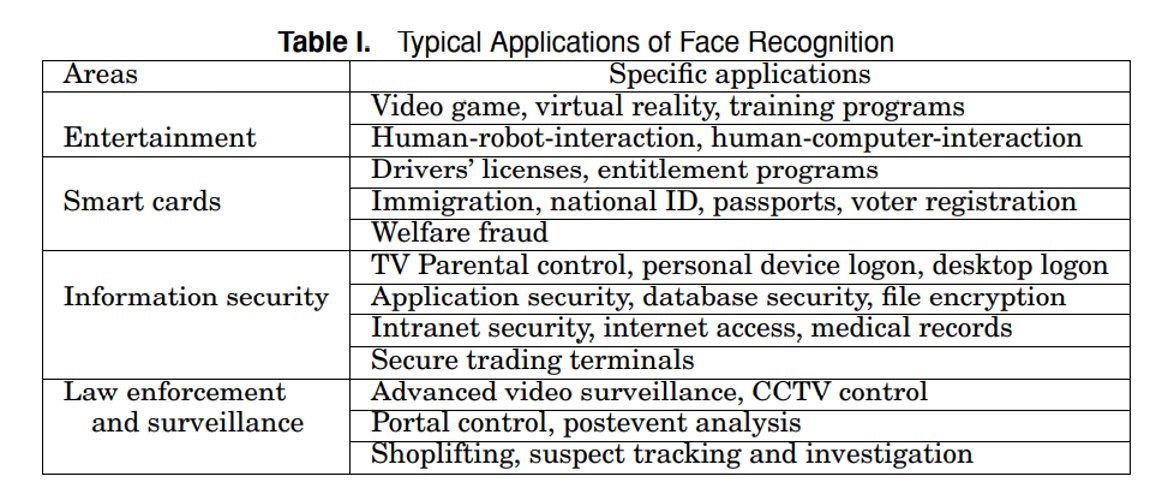

FRT is a mature technology with multiple uses across fields (see Table 1). It is used mainly for two purposes: verification and identification. Verification entails one-to-one matching, where a face is compared with the stored image of a single claimed identity. Identification entails one-to-many matching, where a face is compared against a database of images to find a possible match. While verification is common and widely used, for instance when we unlock our smartphones, the use of FRT for identification by law enforcement agencies has been heavily disputed.
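The difference between the two modes can be made concrete with a minimal sketch. It assumes, purely for illustration, that each face has already been reduced to a short numeric vector and that two faces "match" when the cosine similarity of their vectors crosses a threshold. The function names, the sample vectors, and the 0.9 threshold are all hypothetical and do not describe any deployed system.

```python
import math

def cosine_similarity(a, b):
    """Similarity between two face vectors (1.0 means identical direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def verify(probe, enrolled, threshold=0.9):
    """One-to-one: does the probe match the single claimed identity?"""
    return cosine_similarity(probe, enrolled) >= threshold

def identify(probe, gallery, threshold=0.9):
    """One-to-many: return the best match in the gallery, or None."""
    best_name, best_score = None, threshold
    for name, template in gallery.items():
        score = cosine_similarity(probe, template)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name

# Hypothetical watch list of two enrolled faces (illustrative vectors only).
gallery = {
    "person_a": [0.9, 0.1, 0.2],
    "person_b": [0.1, 0.8, 0.3],
}
probe = [0.88, 0.12, 0.21]  # hypothetical face captured by a camera
```

In this sketch, `verify` answers a yes-or-no question about one claimed identity, while `identify` searches the whole list and can name a person who never claimed any identity. The choice of threshold and the composition of the list both shape who gets flagged, which is where much of the policy dispute over identification lies.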

Table 1 Typical Applications of FRT

The dispute arises from the difference in emphasis between the two logics of action. Those who use a ‘logic of consequence’ focus on the ability of FRT to aid in different policing activities, ranging from crime prevention to detecting missing individuals. They emphasise the cost-effectiveness of FRT and its usefulness in identifying individuals in crowds precisely and without their cooperation. Such stakeholders ask, “What are the consequences of using FRT?” and “How do these consequences compare with alternatives to FRT?”

In contrast, most civil society organisations see FRT’s usage as a violation of rights. Guided by the ‘logic of appropriateness’, they believe that public spaces should allow free movement of citizens and that no information on individuals should be collected without consent. Such stakeholders have consistently highlighted the racial discrimination faced by minorities and people of colour in the US, arguing that FRT can be used for racial profiling and can perpetuate racial bias in the hands of an abusive and biased police force.

The case of the UK illustrates this point clearly. The College of Policing, in a recent document, has provided guidelines for the safe use of FRT by public agencies, justifying its usage based on the logic of consequence. Civil society organisations in the UK are unsatisfied with these guidelines: they do not believe that the state should have the power to continuously surveil its citizens, nor do they think that the guidelines adequately address many of the concerns that have been raised in recent years.

The 2020 R (Bridges) v Chief Constable of South Wales Police case highlighted unlawful FRT use in a sports arena by the South Wales Police. It revealed the wide discretion available to police officers in constructing watch lists and choosing deployment locations, thus breaching “the rights and freedoms of data subjects”. The FRT use also violated the Equality Act 2010, since no test for technology bias by race, gender, or age was conducted. The case was a landmark one, as it brought stakeholders using different logics of action face to face in court.


It must be noted that no stakeholder relies entirely on a single logic of action. For instance, law enforcement agencies might not agree with the use of FRT in schools because they perceive it as inappropriate, while being completely comfortable with its use for crime prevention or investigation. Similarly, civil society and the public might place more trust in the use of FRT by the police than by private organisations (e.g., shopping malls). Overall, what matters is understanding the pros and cons of the technology using both logics of action and then making an informed judgment on its use.

As policymakers rapidly deploy FRT in India, active concerns remain regarding the privacy of citizens and the possible targeting of vulnerable communities. Unlike the UK, India does not have a personal data protection law, and there are no guidelines or laws governing the use of FRT. A debate in Parliament is essential to flesh out the concerns surrounding the use of FRT and to avoid citizen mistrust and adversarial litigation. An uninformed public, subjected to possible bias from technology without its consent, is the last thing our democracy currently needs.

Both logics of action are important, and balancing them is essential. From the logic of consequence, policymakers should think carefully about the biased results of FRT and the possibility of abuse by the police. They must also ask whether alternative ways of doing the same task effectively (e.g., card-based verification) are available. From the logic of appropriateness, policymakers should conduct citizen surveys, like the one done in the UK. They should understand what the public thinks is an appropriate use of FRT and communicate their stand as clearly as possible to build trust. Technology should neither scare nor excite. Rather, it should be investigated objectively to determine whether it can solve the policy problem, given the context of a society.

The views expressed above belong to the author(s).
