First, the country needs an exam watchdog along the lines of the UK's Ofqual (Office of Qualifications and Examinations Regulation) to monitor assessment standards across its many examining bodies.

Second, exam boards must report not only absolute scores but also each candidate's relative position, expressed as either a percentile or a z-score, and ideally a normalised grade point. Raw scores on their own often suggest a spurious level of precision that ignores the subjectivity involved in assessment.
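As a rough illustration of the two relative measures mentioned above, the sketch below computes a z-score (distance from the board mean in standard deviations) and a percentile rank for each candidate. The function names and the "mid-rank" treatment of ties are illustrative assumptions, not a method prescribed by any board.

```python
from statistics import mean, pstdev

def z_scores(marks):
    """Standardise raw marks: (mark - board mean) / board standard deviation."""
    mu = mean(marks)
    sigma = pstdev(marks)  # population SD of this board's cohort
    if sigma == 0:
        raise ValueError("all candidates have the same mark; z-scores are undefined")
    return [(x - mu) / sigma for x in marks]

def percentile_ranks(marks):
    """Percentage of candidates scoring below each mark, counting half of
    those tied with it (an assumed 'mid-rank' convention for ties)."""
    n = len(marks)
    ranks = []
    for x in marks:
        below = sum(1 for y in marks if y < x)
        tied = sum(1 for y in marks if y == x)
        ranks.append(100 * (below + 0.5 * tied) / n)
    return ranks

marks = [62, 75, 75, 88, 95]
print(percentile_ranks(marks))  # [10.0, 40.0, 40.0, 70.0, 90.0]
print(z_scores(marks))
```

Because both measures are computed within a board's own cohort, the same raw mark of, say, 90 can correspond to quite different z-scores and percentiles on a lenient board and on a strict one, which is exactly the information a raw score hides.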

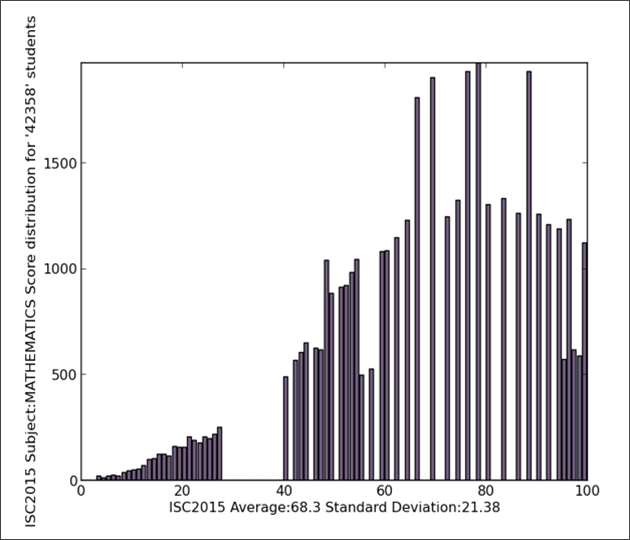

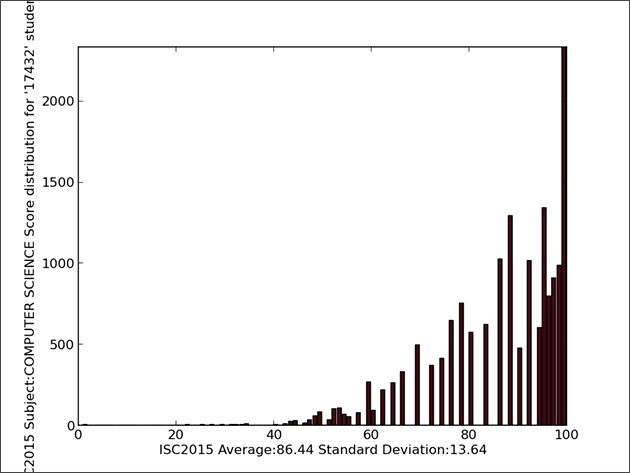

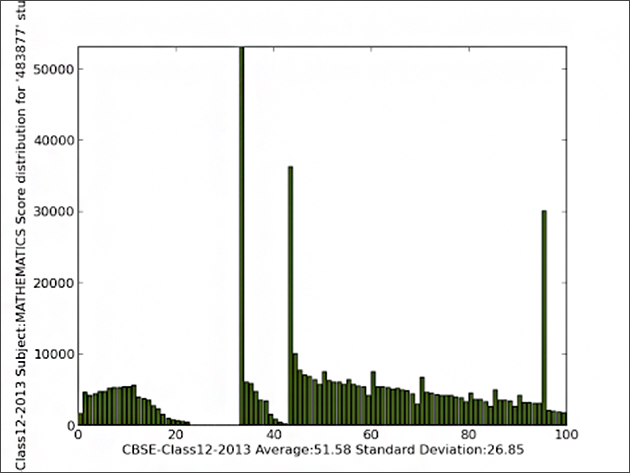

A grade-point system could be helpful as a coarser filter, but the grading schemes of the various boards must be consistent. Moreover, no more than two to three per cent of candidates should attain the highest grade, so that genuine excellence can be distinguished from the rest. The examination loses its resolution, and hence its relevance, if lakhs of candidates (or even hundreds) attain the highest grade.[10]
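One way to enforce such a cap is to assign grade points from a candidate's relative position rather than from raw marks. The sketch below is a minimal illustration under assumed, hypothetical grade bands (only the top 2 per cent can reach a 10); the band cut-offs are invented for the example and are not any board's actual scheme.

```python
from bisect import bisect_left

# Hypothetical bands: (cumulative share of candidates counted from the top, grade point).
# These cut-offs are illustrative assumptions, not an official grading scheme.
BANDS = [(0.02, 10), (0.10, 9), (0.25, 8), (0.45, 7), (0.65, 6), (0.85, 5), (1.00, 4)]

def relative_grades(marks, bands=BANDS):
    """Assign each candidate the grade of the first band that covers their
    share of candidates scoring at or above them."""
    n = len(marks)
    ordered = sorted(marks)
    grades = []
    for x in marks:
        # bisect_left counts marks strictly below x, so this is the share scoring >= x.
        # Tied candidates share one value, so a large tie at a boundary is pushed
        # into the lower band instead of breaching the cap.
        at_or_above = (n - bisect_left(ordered, x)) / n
        for cutoff, grade in bands:
            if at_or_above <= cutoff:
                grades.append(grade)
                break
    return grades

grades = relative_grades(list(range(1, 101)))  # 100 candidates with distinct marks
print(grades.count(10))  # 2
```

With this rule, if many candidates tie on the top mark, none of them receives the highest grade, which is the behaviour the cap is meant to guarantee.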

Third, there should be a general scholastic test along the lines of the SAT (Scholastic Aptitude Test) in the United States, whose results could also be used to benchmark the performance of students across boards. Central universities could use its results in admissions, giving students an incentive to take it. Such a test could also serve as a board-agnostic “pass certificate” that identifies candidates who actually have basic reading, writing and numeracy skills, something India's school exams fail to do for political and commercial reasons.
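To illustrate how a common test could benchmark boards against each other, the sketch below uses simple mean-sigma linear linking: for candidates from one board who also sit the common test, board marks are mapped onto the test's scale by matching means and standard deviations. This is a standard textbook technique swapped in for illustration, not a method proposed here; the function name and the sample marks are hypothetical, and real equating would need larger samples and more careful statistical design.

```python
from statistics import mean, pstdev

def linear_link(board_marks, test_scores):
    """Return a function mapping a board mark onto the common-test scale.

    Assumes board_marks[i] and test_scores[i] belong to the same candidate,
    all from a single board, and applies mean-sigma linking:
        linked = mu_T + (sigma_T / sigma_B) * (x - mu_B)
    """
    if len(board_marks) != len(test_scores) or len(board_marks) < 2:
        raise ValueError("need paired marks for at least two candidates")
    mu_b, sd_b = mean(board_marks), pstdev(board_marks)
    mu_t, sd_t = mean(test_scores), pstdev(test_scores)
    if sd_b == 0:
        raise ValueError("board marks have no spread")
    return lambda x: mu_t + (sd_t / sd_b) * (x - mu_b)

# Hypothetical data: a lenient board and a strict board, each with common-test scores.
lenient = linear_link([90, 94, 98], [50, 60, 70])
strict = linear_link([70, 75, 80], [55, 65, 75])
print(lenient(94), strict(80))
```

In this made-up example, a 94 from the lenient board maps to 60 on the common scale while an 80 from the strict board maps to about 75, showing how a shared test can expose differences in board leniency.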

Fourth, a one-time ‘correction’ (an end to marks inflation) is needed, implemented by all exam boards together rather than gradually over a few years or by different boards in different years. Moreover, given the huge number of examinees, exams should include a good proportion of multiple-choice questions that can be marked electronically on OMR answer sheets, instead of consisting entirely of narrative-answer questions, so that a shortage of examiners does not lead to poor-quality marking of scripts.

Finally, when admitting students, all colleges and universities must have the autonomy to conduct their own layer of assessment beyond the school examinations. Currently, many colleges, such as Ramjas and Shri Ram College of Commerce,[11] cannot do this, because this degree of administrative autonomy generally requires minority status. These colleges are therefore helpless when a disproportionate share of their seats is taken by students from the boards that inflate scores the most.

About the authors

Prashant Bhattacharji is a Data Analyst.

Geeta Kingdon is a Professor at UCL Institute of Education.

Endnotes

[1] FP Staff. “CBSE moderation row: Board awarded up to 11 extra marks in this year’s Class 12th exams.” Firstpost, 2 June 2017. Accessed 17 July 2017. http://www.firstpost.com/india/cbse-moderation-row-board-awarded-up-to-11-grace-marks-in-this-years-class-12th-exams-3508967.html.

[2] PTI. “Bihar Board Class 12 results 2017 sees 64% students fail in their exams.” Livemint, 30 May 2017. Accessed 17 July 2017. http://www.livemint.com/Education/HbiMixK07K2LknJIPPZ6VK/Bihar-Class-12-Board-results-2017-sees-64-students-fail-in.html.

[3] Deep, Jagdeep Singh. “Punjab Class XII results: No grace marks lead to 14 per cent dip in pass percentage.” The Indian Express, 14 May 2017. Accessed 17 July 2017. http://indianexpress.com/article/india/punjab-class-xii-results-no-grace-marks-lead-to-14-per-cent-dip-in-pass-percentage-4654738/.

[4] FE Online. “PSEB Results: Balbir Singh Dhol quits as chairman after CMO asked to resign following poor board results.” The Financial Express, 26 May 2017. Accessed 17 July 2017. http://www.financialexpress.com/education-2/pseb-10th-result-2017-pseb-ac-in-balbir-singh-dhol-quits-as-chairman-after-cmo-asked-to-resign-following-poor-board-examination-results/686413/.

[5] Barrow, A. E. T. “Principles of scaling and the use of grades in examinations.” Teacher Education (India), January 1971. Accessed 17 July 2017. http://www.teindia.nic.in/mhrd/50yrsedu/g/52/76/52760I01.htm.

[6] Bhattacharji, Prashant. “Exposing CBSE and ICSE: A follow-up (2015 Results) after 2 years.” The Learning Point, June 2015. Accessed 17 July 2017. http://www.thelearningpoint.net/home/examination-results-2015/exposing-cbse-and-icse-a-follow-up-after-2-years.

[7] Das, Debarghya. “Hacking into the Indian Education System.” Quora, 4 June 2013. Accessed 17 July 2017. https://deedy.quora.com/Hacking-into-the-Indian-Education-System.

[8] Bhattacharji, Prashant. “A Shocking Story of Marks Tampering and Inflation: Data Mining CBSE Scoring for a decade.” The Learning Point, June 2013. Accessed 17 July 2017. http://www.thelearningpoint.net/home/examination-results-2013/cbse-2004-to-2014-bulls-in-china-shops.

[9] Kunju S., Shihabudeen. “CBSE, State Boards Agree To Scrap ‘Marks Moderation’, Degree Cut-Offs To Drop.” NDTV, 25 April 2017. Accessed 17 July 2017. http://www.ndtv.com/education/cbse-state-boards-agree-to-scrap-marks-moderation-degree-cut-offs-to-drop-1685742.

[10] Sebastian, Kritika Sharma. “Over 1.6 lakh score ‘perfect 10’ CGPA.” The Hindu, 29 May 2016. Accessed 17 July 2017. http://www.thehindu.com/news/cities/Delhi/over-16-lakh-score-perfect-10-cgpa/article8662008.ece.

[11] Gohain, Manash. “Tamil Nadu students grab up to 80% of seats in SRCC so far.” The Times of India, 2 July 2016. Accessed 17 July 2017. http://economictimes.indiatimes.com/industry/services/education/tamil-nadu-students-grab-up-to-80-of-seats-in-srcc-so-far/articleshow/53017636.cms.
